Why You Can’t Trust a Chatbot to Talk About Itself

Chatbots are programmed to provide information and engage in conversations with users, but they lack the ability to truly understand and convey their own identity.

Unlike humans, chatbots do not have personal experiences, emotions, or beliefs that shape their self-perception. Their responses are determined by algorithms and data.

Chatbots may claim to have certain traits or characteristics, but these are merely scripted lines created by developers to make them seem more human-like.

Without self-awareness or authenticity, chatbots cannot provide genuine insights into their own capabilities, limitations, or intentions.

Furthermore, chatbots are prone to errors and misunderstandings, which makes what they say about themselves unreliable.

Users should be cautious when interacting with chatbots that claim to speak about their own qualities or motivations, as these claims may not be accurate or sincere.

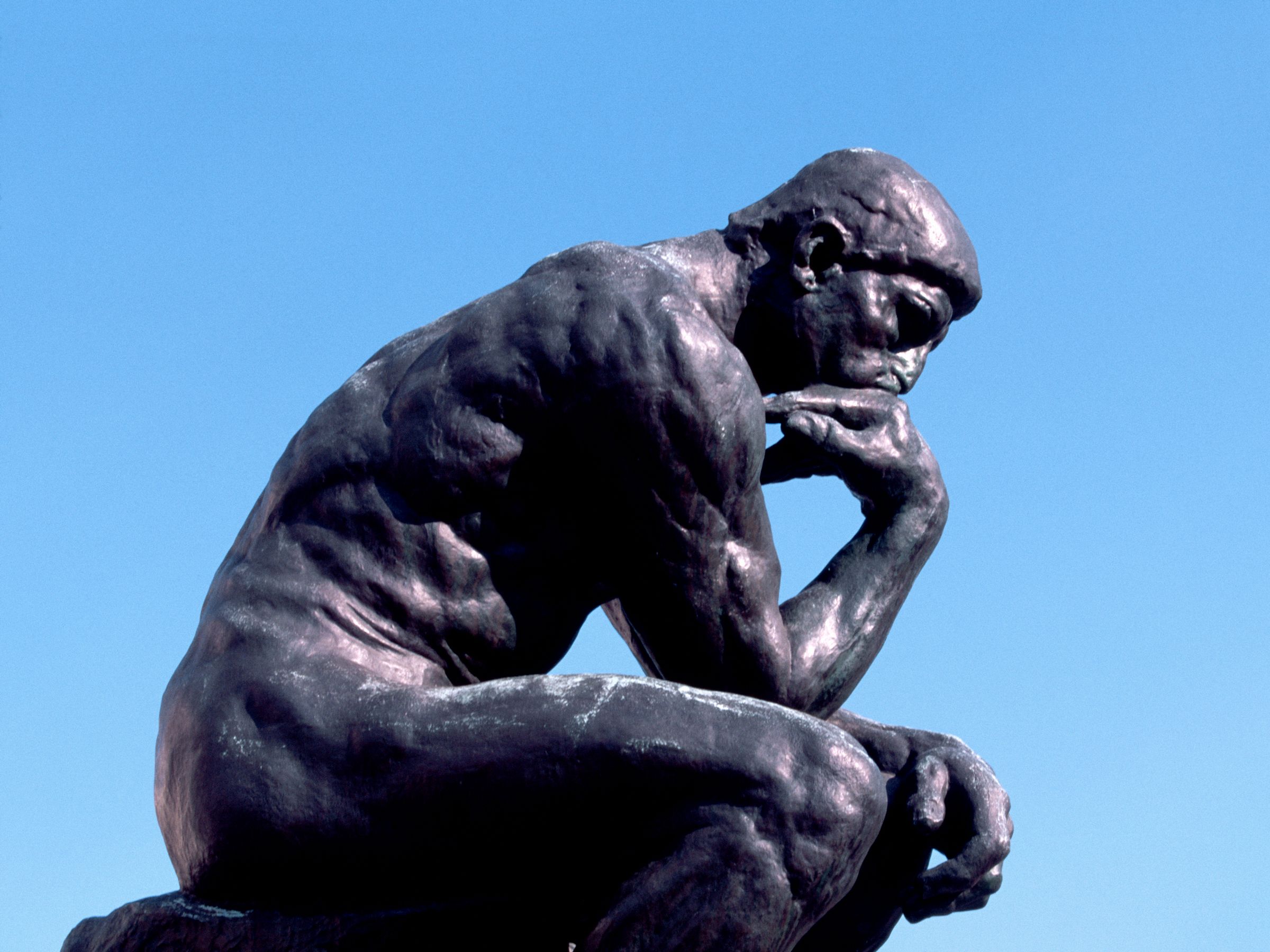

Ultimately, trusting a chatbot to talk about itself is like trusting a mirror to reflect your true self: it can only show a distorted and incomplete image.

While chatbots can be helpful for certain tasks and functions, they are not capable of providing authentic and reliable information about themselves.

It is important for users to approach chatbot interactions with a critical mindset and to be aware of the limitations of artificial intelligence in self-expression.

In conclusion, chatbots may be proficient in performing specific functions, but they should not be trusted to accurately represent or speak about themselves.